ATD Blog

Measuring Training Success in Healthcare

Fri Jan 23 2015

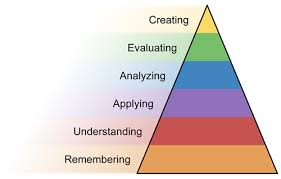

Using Bloom’s three domains of learning as a guide, most designers engage the learner in the cognitive domain (critical thinking) and the psychomotor domain (computer skills). The third domain, affective, is based on emotion; it may be shaped as the clinician grows to value a new technology and to believe it is worth using in daily work.

In Simpson’s expansion of Bloom’s psychomotor domain, use of the electronic health record (EHR) software should develop from the lower level of “perception” through “mechanism” to “complex overt response” and even “adaptation.” These higher levels may be reached through trial and error, which lets the clinician become more confident and proficient with these skills.

But technology skills alone will not suffice. This learning has to take place in context, with psychomotor skills embedded within the cognitive domain, where the clinician will be asked to use higher-level thinking such as analysis, evaluation, and even creation.

Be Fair and Realistic

To be fair to the learner, a plan for measuring performance should include reality-based practice scenarios. You might think these exercises take too much time to develop, but giving the clinician a reserved block of time to complete realistic exercises before evaluation is the fair and constructive thing to do.

What if you had to take your driving test before you had ever taken a practice drive? Likewise, what if clinicians were given access to the EHR in the live environment before they had practiced the clusters of related skills that make up a realistic situation? Only after providing a safe environment for practice with feedback, where the clinician can learn by trial and error, is it fair to measure performance.

But what about high-consequence medical problems that rarely occur, or for which the correct response is not easily remembered? If such a situation has already come up in the live environment, its results were measured there, for better or worse.

L&D leaders will want to take a proactive approach and reinforce the desired behavior by providing a practice-and-feedback scenario in a controlled training event, in the physician lounge, or during training rounds. By simulating the critical event and measuring the clinician’s response, you proactively address measurable deficiencies and promote a safer outcome. (Note: Scenarios are multipurpose and can quickly be turned into a measurement checklist.)
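To make the note about checklists concrete, here is a minimal Python sketch of one way a practice scenario could be recast as a scoring checklist. The `ScenarioChecklist` class and its three steps are hypothetical, loosely modeled on the urgent medication order example later in this post; they are not a tool the author describes. Steps that were never observed count as missed, so gaps stay visible in the report.

```python
from dataclasses import dataclass, field


@dataclass
class ScenarioChecklist:
    """A practice scenario recast as a checklist of observable actions (hypothetical structure)."""
    scenario: str
    steps: list[str]
    observed: dict[str, bool] = field(default_factory=dict)

    def record(self, step: str, performed: bool) -> None:
        # Only steps defined for this scenario can be scored.
        if step not in self.steps:
            raise ValueError(f"Unknown step: {step}")
        self.observed[step] = performed

    def missed_steps(self) -> list[str]:
        # A step not yet observed is treated as missed.
        return [s for s in self.steps if not self.observed.get(s, False)]

    def score(self) -> float:
        # Fraction of checklist steps the clinician performed (0.0 if no steps).
        if not self.steps:
            return 0.0
        return (len(self.steps) - len(self.missed_steps())) / len(self.steps)


# Hypothetical observation during a simulated urgent-order scenario.
checklist = ScenarioChecklist(
    scenario="Emergency medication order",
    steps=[
        "Places the medication order in the EHR",
        "Selects the urgent detail",
        "Notifies a nurse face-to-face",
    ],
)
checklist.record("Places the medication order in the EHR", True)
checklist.record("Selects the urgent detail", True)
checklist.record("Notifies a nurse face-to-face", False)
print(checklist.missed_steps())  # ['Notifies a nurse face-to-face']
print(round(checklist.score(), 2))  # 0.67
```

The list of missed steps, rather than the score alone, is what tells the trainer where feedback should focus.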

Extend Learning beyond the Formal Activity

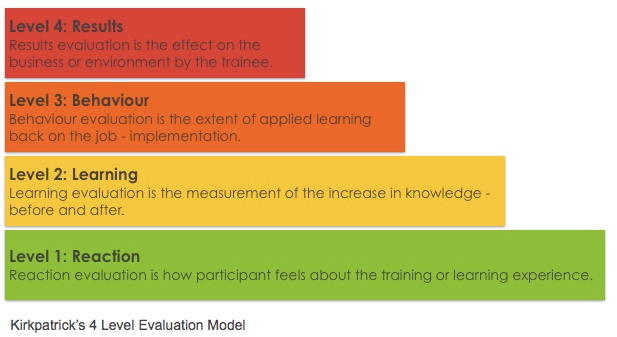

Most L&D professionals are already familiar with the Kirkpatrick model of training evaluation, which measures training results at four successive levels: reaction (Level 1), learning (Level 2), behavior (Level 3), and results (Level 4). The graphic above summarizes the levels of this model.

In the high-stakes world of healthcare, with challenges such as the spread of infectious disease, how do training professionals prove that required skills and knowledge have transferred to the work setting and are being applied effectively? While every L&D function designs and delivers training with varying degrees of success, few provide concrete evidence that their training is working. Are you measuring?

Make Sure with Hard Data

When asked how the organization will train clinicians on an IT update, the training manager may respond, “We’ll make sure” the change is addressed. She may even name the method: web-based training (WBT), a flyer, an email blast, or a posting on the department webpage. This approach is vague and does nothing to raise the training department’s standing. More importantly, it does not ensure that the information is integrated into the clinician’s daily workflow.

How do you prove to the organization that you have accomplished an essential training task to meet organizational goals? It is insufficient simply to communicate a change without taking the final step: measuring.

By contrast, a cutting-edge training department will prove, with concrete evidence, that clinicians are doing what is required. Here L&D's mission is to interact with learners, demonstrate the new skill, observe performance, document results, and finally report to the organization that competency has been achieved. Not only has L&D provided evidence that the skill is being applied, but it has also created a collaborative environment that bridges the gap between clinicians on the busy floor and training professionals.

Or consider another scenario in which the IT department must create an alert to address the threat of a virus spread by travelers from a particular area. To make sure everybody uses the functionality as intended (adult learners left without direction will fill the vacuum with their own interpretation), L&D makes training rounds to reinforce the desired behavior. As an added benefit to productivity, the learning leader discovers that the ambulatory unit in a new wing has poor connectivity. Although this is not strictly a training issue, he increases the training department’s impact by taking it back to IT for resolution. (Note: This type of interaction on the floor may uncover the root cause of a persistent problem that has not been identified at a higher level.)

Meanwhile, some organizations rely heavily on their electronic health record (EHR) system. However, the EHR is just a tool, albeit a vital one. It still requires the clinician to make decisions that take advantage of the technology’s potential. Using the software to document or view patient data in order to make a sound medical decision is just the beginning of the comprehensive training process.

Bottom line: Measuring on-the-job performance is the culmination of training, so let’s prepare learners for fair and consistent measurement conditions.

Engage Hands and Heads

When the hands are done tapping the keyboard, the head takes over at a higher level to analyze data, evaluate outcomes, and create a viable solution. So how are L&D functions making sure that they have given the clinician the skill set to synthesize patient data from the EHR, using higher-level thinking to arrive at the soundest medical decision?

The answer: L&D needs to train the whole set of skills, from psychomotor to cognitive and even affective, and then measure that whole set to determine our success.

It is probably safe to say that most organizations use Level 1 evaluations at the end of training in the form of a questionnaire, often called a “smile sheet,” that asks respondents how well they liked the training. At the end of the training event, they might also evaluate what a clinician has learned with a skills-based test; this is Level 2. Ideally, this test is not in a question-and-answer format but requires the learner to actually complete a procedure on the computer.

But what about venturing into the real work setting, where the skills must be applied amid a myriad of challenges such as emergent events, multitasking, interruptions, and pressure from patients and peers? How well is the clinician using these newly acquired skills now, having transferred them from the controlled setting to the chaotic hospital floor? That is what L&D measures at Level 3.

Indeed, it takes a bold training department, one that wants to prove its worth, to venture into Level 3. In the T+D article “The Hidden Power of Kirkpatrick’s Four Levels,” Jim Kirkpatrick writes, “Level 3 is the forgotten evaluation level, yet it is the key to maximizing training and development effectiveness. After all, what is training and learning unless it is applied?”

The pressurized environment, where ideal technology meets the reality of the daily grind, is the testing ground for training. Can your L&D function prove that it not only taught the clinician the step-by-step procedure but also extended that experience to the higher levels of learning?

For example, when a clinician must place a medication order in response to an emergency, she selects the “urgent” detail in the EHR. Recognizing that nurses are busy in patient rooms rather than sitting at the computer waiting for this order, she completes it by telling a nurse face-to-face that the urgent order has been placed. This task requires the computer skill to place the urgent order, the critical thinking to recognize that it will not otherwise be seen in the required timeframe, and the interpersonal skill to communicate the order verbally.

Coach for Competency

L&D's challenge is to stretch training beyond the controlled training activity and make sure the clinician is using the newly acquired skills on the job. This stage begins at Level 2, when the skills test is administered at the end of the formal training event, by using the test data for two purposes: as baseline data for measuring performance and as an indicator of which clinicians will need more help before they achieve competency.

The training department, in collaboration with clinical stakeholders, is responsible for facilitating a performance standard that supports quality patient care. What level of performance is acceptable for giving the clinician access to the software, and what is below par and will require follow-up coaching?

For instance, when facilitating a structured computer lab, the trainer recognizes that learners have a wide range of computer skills and medical experience, from residents just out of medical school to seasoned physicians adjusting to the electronic environment. The learners with more computer experience fly through the practice scenarios and score well on the Level 2 evaluation; they will need no follow-up. The less computer-savvy learners perform poorly on the evaluation, but they will likely be able to perform with more practice on the computer and at-the-elbow support.
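The dual use of Level 2 data described above can be sketched in a few lines of Python. This is an illustration under stated assumptions, not a prescribed method: `triage_level2`, the 0 to 100 score scale, the passing score of 80, and the clinician records are all hypothetical, and in practice the cut-off would be the standard agreed on with clinical stakeholders.

```python
from typing import NamedTuple


class Level2Result(NamedTuple):
    clinician: str
    score: float  # Level 2 skills-test score on an assumed 0-100 scale


def triage_level2(
    results: list[Level2Result], passing_score: float
) -> tuple[dict[str, float], list[str]]:
    """Use one set of Level 2 scores for two purposes (a sketch).

    passing_score is a hypothetical cut-off that L&D and clinical
    stakeholders would agree on; it is not a published standard.
    """
    # Purpose 1: keep every score as baseline data for later Level 3 comparison.
    baseline = {r.clinician: r.score for r in results}
    # Purpose 2: flag below-standard clinicians for follow-up coaching,
    # lowest scores first.
    needs_coaching = [
        r.clinician
        for r in sorted(results, key=lambda res: res.score)
        if r.score < passing_score
    ]
    return baseline, needs_coaching


# Made-up records for illustration only.
results = [
    Level2Result("Resident A", 95.0),
    Level2Result("Physician B", 62.0),
    Level2Result("Physician C", 74.0),
]
baseline, needs_coaching = triage_level2(results, passing_score=80.0)
print(needs_coaching)  # ['Physician B', 'Physician C']
```

Sorting by score is a design choice: it puts the clinicians furthest from the standard at the top of the coaching list, while the full baseline is kept for comparison once on-the-job performance is observed.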

This training approach takes the learning experience to the clinician. No longer does learning end after a formal training event; learning continues via coaching until competency is achieved and other obstacles to training are addressed.

Although perhaps hesitant about an extra person on the floor, a responsive manager will appreciate an intervention that helps staff achieve competency. By distributing reports detailing each clinician’s continuing progress to managers and stakeholders, L&D will prove that training is working. As an added benefit of follow-up, the training team may also uncover persistent performance (non-training) problems to bring back to stakeholders for resolution.

One challenge for L&D is digging until it finds the right sources of data to measure; learning leaders will need to be creative and persistent. The most effective way to collect data in such a dynamic environment will vary according to the structure of the organization, executive vision, and resources available.

The basic collection methods, however, include direct observation, interaction in the physician lounge, peer reports and audits, and self-reporting by the clinician, which is a very important tool in a nonjudgmental environment. This stage requires buy-in from partners outside the training department, but a respectful working relationship and a well-communicated evaluation plan, with collection tools and methods in place, will go a long way toward establishing a collaborative atmosphere.

Proving that Training Matters

The stages of measurement from Level 1 to Level 3 are an important opportunity to make a meaningful impact at every level, from patient to clinician to organization. L&D can create a safe and respectful learning experience that continues well into application on the floor. More importantly, the data collected in a Level 3 evaluation is the baseline for the Level 4 evaluation of how well training met the targeted organizational goals.

This post has only scratched the surface of a purposefully designed and accountable training program. Measurement may seem like the end of the process, but beginning with the end in mind (as Stephen R. Covey recommends in The 7 Habits of Highly Effective People: Powerful Lessons in Personal Change) is an approach that pays off. Measuring what your L&D function is doing now will pave the way for delivering effective and accountable training in the future.

If this approach to proving how invaluable training is to organizational goals makes you uncomfortable, that is a good thing. You know you are taking a bold step to grow and flex your professional might.