TD Magazine Article


Build a Learning Data Dream Team

Take a collaborative approach to data and analytics.

Mon Jul 01 2024

You may be surprised to learn that the best data-informed insights to improve talent development don't come just from data and analytics experts. It often takes multiple perspectives and a team effort to ask the right questions, understand the meaning behind data, and take data-informed actions. Data and analytics in TD works best as a team sport. However, like any effective team, it requires some mutual understanding—shared data literacy as well as knowing each member's role in the team's data and analytics process. It also takes practice working together to develop as a data-informed TD team.

Data and analytics for corporate learning—or more simply, learning analytics—is a subset of people analytics that taps into the power of data to optimize learning, workforce development, collaboration, and business results. Broadly, people analytics includes talent intelligence as well as HR, performance, and learning analytics. Whether the analysis takes place via machine learning or with spreadsheets, the aim is the same: Increase performance, leadership, and well-being on the job using data-driven action.

"I need to get some of that," you may be saying to yourself—and you would not be alone. The US Bureau of Labor Statistics' Occupational Outlook Handbook predicts that employment of data scientists will grow 35 percent from 2022 to 2032.

However, before you rush to hire a data scientist, take a strategic, team-based approach.

What do data scientists do?

Data scientists typically have degrees in mathematics, statistics, or computer science. When they're working with an L&D team, they may be involved with:

Understanding learner behaviors. They analyze data from L&D platforms to understand how employees interact with materials and identify patterns that lead to successful learning outcomes.

Evaluating program effectiveness. They use statistical analysis, machine learning models, and experimental research to assess the effectiveness of training programs or learning technology, measuring organizational impacts and return on investment.

Using predictive analytics for learner success. They develop models to predict learner success and identify underperforming learners who may need additional support, enabling targeted behavioral and cognitive solutions.

Customizing learning experiences. They apply artificial intelligence to personalize learning experiences and adapt content and recommendations based on individual learner data, which enables human-machine collaborations and optimization.

Creating and validating metrics. They design and validate new metrics to accurately measure learning outcomes, engagement, and the overall impact of L&D initiatives. They also connect data points with organizational goals and report to leadership.

Although that may all sound good, hiring a lone data scientist is not going to solve all your L&D team's problems. That's because data science works only in context: It takes the business, the learning programs, and the evaluation strategy together to solve problems holistically using learning analytics.

A learning analytics strategy

In Measurement Demystified, David Vance and Peggy Parskey lay out a compelling case for measurement and analytics in the L&D space. They define analytics as "an in-depth exploration of the data, which may include advanced statistical techniques, such as regression, to extract insights from the data or discover relationships among measures." The L&D team, then, must put those insights into action via decision making (or at least recommending decisions). Otherwise, the analytics effort may appear to be much ado about nothing, and its momentum will suffer. A good learning analytics strategy is all about using data to make learning better.

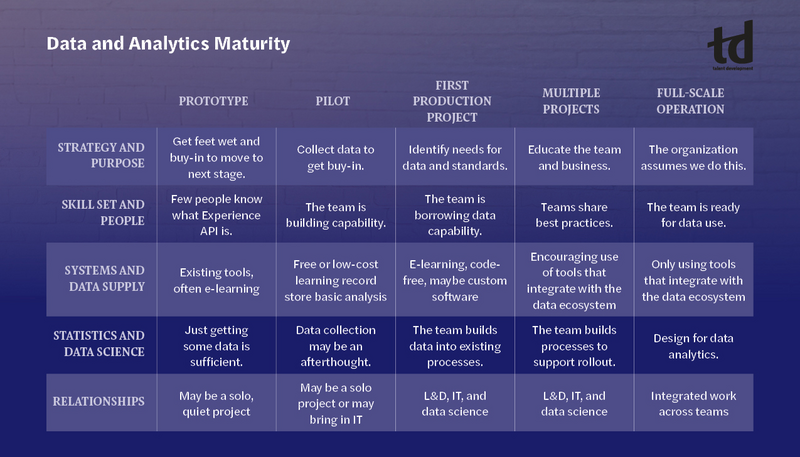

It's important to contextualize your learning analytics strategy to your company's overall data strategy and the maturity of your L&D measurement and data capabilities (see figure). An L&D team with less mature data skills in an organization without a strong data culture or supports will typically face steeper barriers than a team that has some momentum inside a data-driven organization that already has the infrastructure, expertise, and processes for analytics. At the same time, an L&D team with less mature data skills may find that they have more room to explore and less risk associated with any one project.

Regardless of where your L&D team is in its analytics maturity, to implement an effective strategy, bring together the team's skills; the organization's data infrastructure, statistics, and data science; and relationships with other functions and outside vendors.

Roles within a data-focused L&D team

When the team is small, a single person or a small number of people may cover several of the roles below. In larger organizations, each role may represent a team of specialists. The list isn't meant to be prescriptive but rather informative of the variety of skill sets that a data-focused L&D team may need.

Learning designer or instructional designer. Both a consumer and a creator of data, a learning designer uses data to inform their work and instruments the learning experiences they create to generate the data that the rest of the team uses. (Instrumentation is the method for deciding what data to collect and then developing any necessary additional hardware, software, or processes for that data collection.)

The learning designer's role is to hold learners' needs at the center, as well as to help the rest of the team understand the data's nuances.

Data scientist. This person gathers data, cleans it in preparation for analysis, and analyzes it for insights and trends, checking in with the learning designer to confirm that the results are useful. Within the team, the data scientist can also educate their colleagues on data literacy, thus lifting the entire team's capabilities.

Business sponsor. The business sponsor helps the team align to the company strategy while serving as the executive on point for prioritizing projects, resourcing, and overcoming organizational barriers.

Developer. This role works closely with the learning designer to instrument the learning experiences to collect and send data in a useful format for the data scientist's analysis.

Learning platform manager. This person administers the learning technology ecosystem, such as the learning management system, learning experience platform, learning record store, and various other content authoring and delivery tools. The role is important on the team because integrating all the tools is necessary for quickly providing clean, relevant data for analysis.

Subject matter expert. SMEs work closely with learning designers to develop programs. SMEs' insights about their daily work behaviors and performance metrics are key to the overall team's effectiveness and business alignment.

AI ethics and bias manager. As L&D teams generate ever more data, they must become careful data stewards. Issues of data-collection ethics, equity in data use, data privacy and protection, and bias detection all fall under this role's purview, but they are also the responsibility of every member of the team.

Change management specialist. The change management specialist plays a critical role on the team during two stages of maturity. Early on, as the team is gaining traction, change management support will properly position the team's role within the company and help others understand how the team operates. Once the team is operational and more mature, the decisions resulting from analyzed data may require skillful change management to implement.

Regarding the full, data-focused L&D team, a frequently asked question is: Where should it sit within the organizational structure? There are as many answers to that question as there are business structures.

For instance, a nationwide healthcare provider created a merged learning design and analytics team, bringing the analytics function adjacent to the instructional design team and under the same organizational unit. That is a powerful combination, but it risks isolating data scientists from others within the company and creating a siloed technology infrastructure. A community of practice or other cross-departmental functional community can address that.

Another example involves a global furniture and home goods retailer that brought the learning technology and analytics team into the IT organization to serve as a center of excellence with pooled resources and technology infrastructure. The risks in such an approach are that the shared services analytics team is not as available to the learning team and does not have the functional depth to jump right into an instructional design project.

As a final example, consider a high-tech SaaS company that has a centralized data, analytics, and intelligence team within the sales enablement organization. The shared team works on a request basis, using an intake process to prioritize and add new learning initiatives to analyze and report on. Within the learning design organization, the centralized resource also consults with various functions on measurement strategy and implementation, again through an intake process.

Getting to work

Regardless of where the data-focused L&D team sits within a company, its members will need numerous practices to be effective in their work together. A strict interpretation of the ADDIE (analysis, design, development, implementation, and evaluation) model would mean that evaluation occurs at the end of the project. However, an effective analytics strategy requires attention to measurement and data from the start of a project and at every stage after that.

Alignment on measurement. Among the team's shared goals are the defined outcome measures for each project, which will likely include a combination of business outcome measures and learning activity and perception measures.

Process. Consider starting with an examination of the learning design process itself. How does the L&D team take into account data and analytics within the project intake? Project kickoff? Pilot testing? Release management? Each of those is an opportunity for learning designers to collaborate with the data scientists instead of waiting until the end. At each stage, the combined team can be asking:

What questions do we want data to answer?

Are we collecting the necessary data to answer those questions? (If not, what instrumentation do we need to add?)

What insights can we draw from the data we have to inform the next decision or iteration?

Collaboration. Build in opportunities and frameworks for collaboration throughout the project. Effective teams have project templates and a rolling quality assurance process, making for ideal opportunities to inject collaboration throughout the project.

Data governance and documentation. At every step as people make decisions about data, all team members have an opportunity to document those decisions and communicate them within the organization. Data governance is the set of policies and practices that a company uses to ensure quality, consistency, accessibility, and security of its data. For example, it may involve choosing and documenting which Experience API (xAPI) data profile to use for each component of a learning program.
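To make that kind of documentation concrete, here is a minimal sketch in Python of a single xAPI-style statement, which is built around an actor, a verb, and an object. The helper name build_statement, the learner email address, and the course identifier are hypothetical and exist only for illustration. The verb identifier comes from ADL's published verb vocabulary. A real team would take its identifiers from whichever xAPI profile it has chosen and documented.

```python
import json
from datetime import datetime, timezone


def build_statement(learner_email, verb_id, verb_label, activity_id, activity_name):
    """Assemble a minimal xAPI-style statement (actor, verb, object)."""
    return {
        "actor": {"objectType": "Agent", "mbox": f"mailto:{learner_email}"},
        "verb": {"id": verb_id, "display": {"en-US": verb_label}},
        "object": {
            "objectType": "Activity",
            "id": activity_id,
            "definition": {"name": {"en-US": activity_name}},
        },
        # Record when the experience happened, in UTC.
        "timestamp": datetime.now(timezone.utc).isoformat(),
    }


statement = build_statement(
    learner_email="learner@example.com",  # hypothetical learner
    verb_id="http://adlnet.gov/expapi/verbs/completed",
    verb_label="completed",
    activity_id="https://example.com/courses/data-literacy-101",  # hypothetical course
    activity_name="Data Literacy 101",
)

print(json.dumps(statement, indent=2))
```

Agreeing on one function like this, and writing down which verbs and activity identifiers it may receive, is one small way a team can keep the data it sends to a learning record store consistent.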

AI. Machine learning algorithms help L&D teams and data science partners sift through vast amounts of learning data to identify patterns and predict outcomes, enhancing L&D's understanding of learner behaviors and the effectiveness of learning programs. In the process of that work, the combined team will need to follow both internal and external policies and guidelines.

The road ahead

Thanks to the wide adoption of standards such as xAPI and its growing community, it's easier than ever to collect the data from learning experiences that gives L&D the greatest insights for optimizing learning. Likewise, AI-powered tools are making it simpler to make sense of and use that data. TD professionals must get engaged with data to drive decision making for better learning outcomes. The good news is that they don't need to do it all themselves.

Success in Using Learning Analytics

A US university's engineering school offers part-time graduate degrees, certificates, and nondegree programs for working professionals. The university structures the programs as massive open online courses (MOOCs) with set schedules. Courses typically run for 12 weeks and conclude with a proctored final exam. The online format enables students worldwide to enroll and participate. However, it also presents challenges in maintaining student interactivity and engagement within the courses. To address those issues, the university formed a team comprising instructional designers, data scientists, engineers, and doctoral researchers in education. The team focuses on enhancing instructor-student interactions and providing timely resources to keep students motivated.

Using learning analytics and data insights, the team has significantly improved the courses' instructional design and the overall student learning experience. Its efforts have led to developing a more interactive forum for quicker question-and-answer sessions and identifying key factors that contribute to student dropout. By reducing response times to student inquiries and adapting the curriculum based on analytics, the team has increased student satisfaction and decreased dropout rates across courses.

Learning analytics plays a critical role in improving learning outcomes and students' satisfaction. The university's successful application of learning analytics also inspired several other advanced analytical strategies that could apply to other MOOCs.

Predictive analytics for early identification of at-risk students

Purpose: Develop a predictive model to identify students at risk of dropping out or underperforming early in the program.

Analytical tools: Implement machine learning algorithms (such as logistic regression, decision trees, or neural networks) that use historical data to predict future performance and engagement levels.
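As a rough illustration of the logistic regression option, the sketch below fits a tiny model in plain Python using gradient descent. It is not the university's model. The two features (scaled weekly logins and share of assignments submitted), the eight historical records, and the function names are all invented for the example, and a production team would more likely use an established statistics or machine learning library.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train_logistic(rows, labels, lr=0.5, epochs=3000):
    """Fit logistic regression weights by batch gradient descent on log loss."""
    n_features = len(rows[0])
    weights = [0.0] * n_features
    bias = 0.0
    n = len(rows)
    for _ in range(epochs):
        grad_w = [0.0] * n_features
        grad_b = 0.0
        for x, y in zip(rows, labels):
            error = sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias) - y
            for j in range(n_features):
                grad_w[j] += error * x[j]
            grad_b += error
        weights = [w - lr * g / n for w, g in zip(weights, grad_w)]
        bias -= lr * grad_b / n
    return weights, bias


def dropout_risk(x, weights, bias):
    """Return the modeled probability (0 to 1) that a student drops out."""
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)


# Hypothetical history: [scaled weekly logins, share of assignments submitted]
# paired with 1 if the student dropped out and 0 if the student finished.
history = [
    ([0.1, 0.2], 1), ([0.2, 0.1], 1), ([0.3, 0.3], 1), ([0.2, 0.4], 1),
    ([0.7, 0.8], 0), ([0.8, 0.9], 0), ([0.9, 0.7], 0), ([0.6, 0.9], 0),
]
rows = [features for features, _ in history]
labels = [label for _, label in history]
weights, bias = train_logistic(rows, labels)

low_engagement = dropout_risk([0.15, 0.2], weights, bias)
high_engagement = dropout_risk([0.85, 0.9], weights, bias)
print(f"low engagement risk: {low_engagement:.2f}")
print(f"high engagement risk: {high_engagement:.2f}")
```

The point of the sketch is the workflow: Historical records go in, and a risk score for each current student comes out early enough for someone to act on it.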

Personalized learning pathways

Purpose: Create dynamic, adaptive learning pathways that adjust based on a learner's pace, performance, and preferences.

Analytical tools: Use artificial intelligence algorithms, specifically recommender systems, that analyze each student's interaction and performance data to suggest personalized content, resources, and remedial actions.
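One simple form of recommender system suggests resources that similar learners have used. The sketch below assumes a small table of which learners opened which resources and ranks unseen resources by how similar their users are to the target learner. The learner names, resource names, and the recommend function are hypothetical, and real recommender systems usually draw on far richer interaction and performance data.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length usage vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def recommend(target, interactions, resources, top_n=2):
    """Rank resources the target has not used by similarity-weighted peer usage."""
    target_vec = interactions[target]
    scores = [0.0] * len(resources)
    for learner, vec in interactions.items():
        if learner == target:
            continue
        similarity = cosine(target_vec, vec)
        for i, used in enumerate(vec):
            if used and not target_vec[i]:
                scores[i] += similarity * used
    unseen = [i for i in range(len(resources)) if not target_vec[i] and scores[i] > 0]
    ranked = sorted(unseen, key=lambda i: scores[i], reverse=True)
    return [resources[i] for i in ranked[:top_n]]


# Hypothetical usage data: 1 means the learner opened the resource.
resources = ["stats_primer", "xapi_basics", "dashboard_lab", "regression_video"]
interactions = {
    "ana": [1, 1, 0, 0],
    "ben": [1, 1, 1, 0],
    "chi": [0, 0, 1, 1],
}

print(recommend("ana", interactions, resources))
```

Here the learner "ana" shares two resources with "ben" and none with "chi", so only the resource that "ben" also used is suggested.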

Content difficulty and engagement analysis

Purpose: Analyze learning content to identify sections that are either too challenging or not engaging enough, contributing to high dropout rates.

Analytical tools: Apply natural language processing and sentiment analysis to parse student feedback and discussions for insights into content difficulty and engagement. Clustering algorithms can also segment content into different difficulty levels based on student performance data.
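The clustering idea can be sketched with a one-dimensional k-means routine that groups course modules by their average quiz score. The module names, scores, and the three difficulty labels are assumptions made up for this example, and the starting centers are chosen deterministically so the result is repeatable.

```python
def kmeans_1d(values, k=3, iterations=20):
    """Cluster numbers into k groups (k >= 2) and return the group centers."""
    ordered = sorted(values)
    # Deterministic start: spread the initial centers across the sorted values.
    centers = [ordered[i * (len(ordered) - 1) // (k - 1)] for i in range(k)]
    for _ in range(iterations):
        groups = [[] for _ in range(k)]
        for value in values:
            nearest = min(range(k), key=lambda c: abs(value - centers[c]))
            groups[nearest].append(value)
        # Move each center to the mean of its group; keep it if the group is empty.
        centers = [
            sum(group) / len(group) if group else centers[i]
            for i, group in enumerate(groups)
        ]
    return centers


def label_modules(scores, names=("hard", "medium", "easy")):
    """Label each module by its nearest center; the lowest scores count as hardest."""
    centers = sorted(kmeans_1d(list(scores.values()), k=len(names)))
    return {
        module: names[min(range(len(centers)), key=lambda c: abs(score - centers[c]))]
        for module, score in scores.items()
    }


# Hypothetical average quiz scores per module (share of questions answered correctly).
module_scores = {"m1": 0.92, "m2": 0.88, "m3": 0.71, "m4": 0.68, "m5": 0.45, "m6": 0.41}
labels = label_modules(module_scores)
print(labels)
```

Modules that land in the "hard" group are candidates for a closer look by the learning designer, who can judge whether the content is genuinely difficult or simply unclear.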

Adaptive assessments

Purpose: Implement adaptive assessments that adjust the difficulty of questions in real time based on the student's performance.

Analytical tools: Use machine learning models that dynamically select question difficulty to match the learner's knowledge level, ensuring assessments are challenging but fair.
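A trained machine learning model is not required to see the basic mechanism. The sketch below swaps in a simpler, Elo-style rule: It keeps a running estimate of the learner's ability, nudges the estimate after each answer, and picks the unasked question whose difficulty is closest to it. The question bank, difficulty values, step size, and function names are all hypothetical.

```python
import math


def expected_correct(ability, difficulty):
    """Modeled chance of a correct answer, given ability and question difficulty."""
    return 1.0 / (1.0 + math.exp(-(ability - difficulty)))


def update_ability(ability, difficulty, correct, step=0.4):
    """Raise the estimate after a correct answer and lower it after a miss."""
    outcome = 1.0 if correct else 0.0
    return ability + step * (outcome - expected_correct(ability, difficulty))


def next_question(ability, bank, asked):
    """Pick the unasked question whose difficulty is closest to the estimate."""
    candidates = [q for q in bank if q not in asked]
    return min(candidates, key=lambda q: abs(bank[q] - ability))


# Hypothetical question bank: question ID mapped to difficulty (higher is harder).
bank = {"q1": -1.0, "q2": -0.5, "q3": 0.0, "q4": 0.5, "q5": 1.0}

ability = 0.0
asked = set()

first = next_question(ability, bank, asked)
asked.add(first)
ability = update_ability(ability, bank[first], correct=True)

second = next_question(ability, bank, asked)
print(first, second, round(ability, 2))
```

After a correct answer on a middle-difficulty question, the estimate rises and the next question selected is a harder one. A wrong answer would push the selection the other way.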

Feedback and support automation

Purpose: Automate personalized feedback and support messages based on student performance and engagement indicators.

Analytical tools: Leverage AI-driven chatbots and automated email systems that can provide instant feedback, answer common questions, and direct students to resources based on their specific needs and queries.